Will AI Lead to Mass Unemployment?

Navigating the Complex Future of Human-AI Interaction at Work

Human beings are by nature quite insecure...

If the age of hunting and gathering taught humans anything, it was to be ever vigilant and prepared for the unexpected. Peace of mind today was no guarantee of safety tomorrow; in a precarious environment with unpredictable dangers lurking around every corner, complacency could get us killed.

To survive in a challenging world, we started inventing ways to minimize the uncertainties we might encounter. We learned to use fire as a defense against inclement weather and animal attacks; we began herding livestock in response to the unstable food supply that hunting provided; and we took up large-scale agriculture so that we would no longer have to rely on luck to gather the food that supplemented our diets.

The urgency and the agency to adapt to, or address, any uncertainty that could threaten our survival became a leitmotif throughout the rest of human civilization. It drove us to evolve and innovate for a better life, leading to great inventions such as electricity and the internal combustion engine.

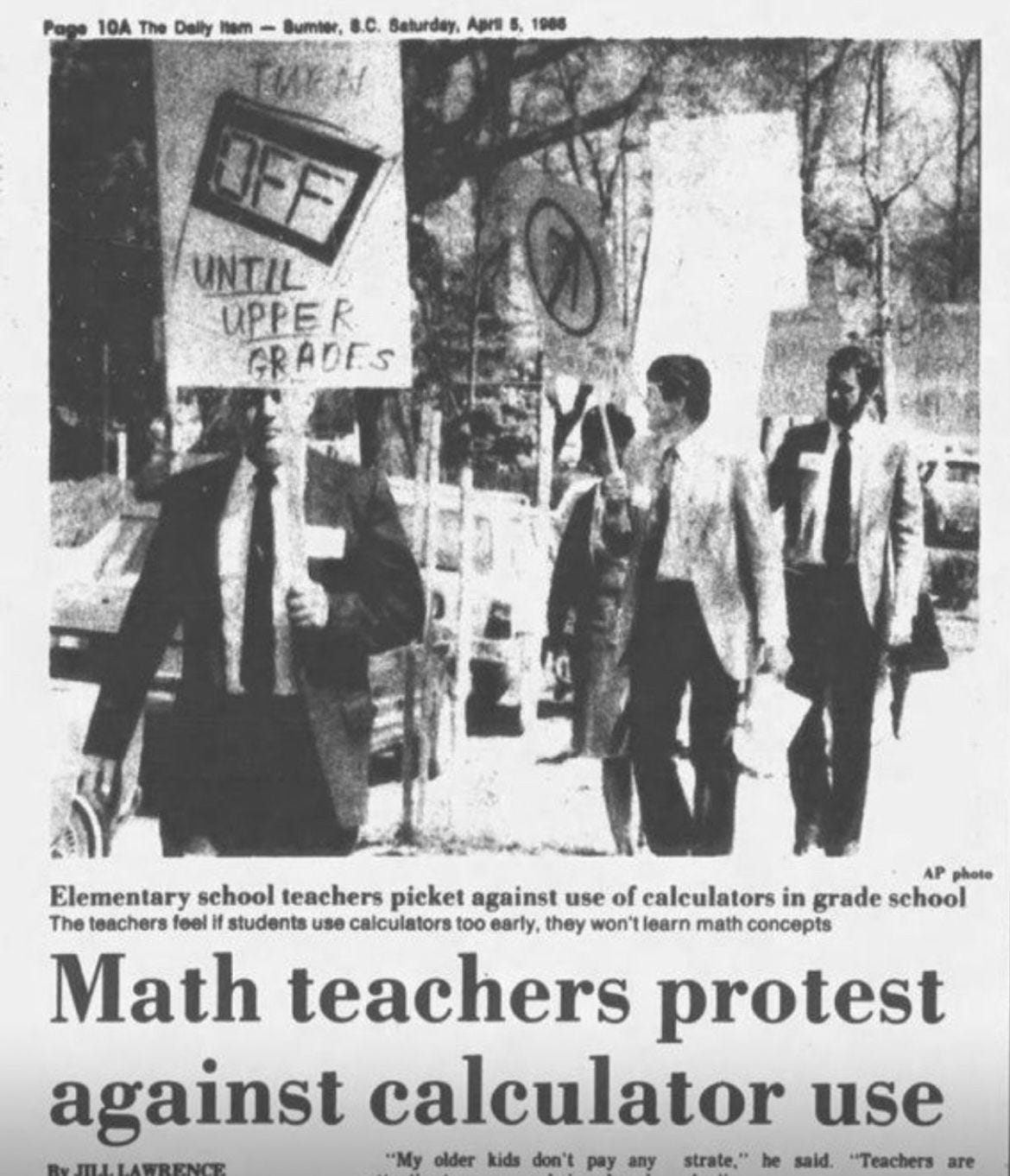

Despite the tremendous progress we have made in managing our inner insecurity, our attitude toward it has soured in recent times. The same insecurity that once prodded us to adapt and keep raising our standard of living seems to have become one of the main justifications we default to when resisting change. We grow anxious when confronted with innovations and iconoclastic ideas, even those of our own making, lest the "new thing" disrupt the reality on which we depend to survive. For example:

In the Industrial Revolution, workers went on strike against the introduction of new labor-saving machines

In the 1980s, math teachers protested over the use of calculators in elementary schools

Ironically, while many of us are convinced that each technological leap ushers in a secular trend of economic and productivity growth, we cannot help imagining the dystopian scenario in which we are all displaced by some new automation technology.

Now that we have been awed by what AI-powered applications like ChatGPT can do, our inner sense of insecurity, unsurprisingly, is creeping in again. Unlike previous industrial innovations, whose main victims were blue-collar workers, the rise of AI is seen as a major threat to knowledge workers, who tend to be the well-educated middle class.

Such fear is further fueled by media reports warning that the technology is so powerful that it will eventually take over most of the jobs currently done by humans, massively disrupting the workforce. A recent Goldman Sachs report on the impact of AI on economic growth estimates that two-thirds of occupations could be partially automated by AI, with administrative, legal, architecture, and engineering jobs among the most exposed.

While it is certainly true that the advent of AI will create a paradigm shift in how we work and what we do, I don't buy the idea that it will make us obsolete. For one thing, AI must still overcome many hurdles before it can truly cement itself as an omnipotent agent. More importantly, we have valued human input and interaction for so long that it seems very unlikely to me that our human-centered world will be upended overnight. So rather than fearing that AI will cannibalize our work, I think it will become an incredible complement to humans: saving us time, turbocharging our productivity, and freeing us to focus on the distinctly human things we have always excelled at.

AI, specifically the LLM, is a versatile general-purpose agent with drawbacks to overcome

If you have ever played with ChatGPT, an interface to GPT-3.5 (a large language model, or LLM, specialized in natural language processing), you have probably been amazed by the human-like responses it generates. Given an input prompt, it produces prose so logical and coherent that it makes you wonder whether all those years of education were a waste of time.

Yes, I get it. It is quite disconcerting to see LLMs perform most natural language tasks, such as summarization, translation, and answering factual questions, on par with, if not better than, humans. But we shouldn't get carried away and conclude that this ostensibly almighty creation is destined to collapse human society.

My argument stems mostly from the fact that generative AI like LLMs hasn't yet reached a level of maturity that would let it completely displace humans in the decision-making process, and much of the reason has to do with its foundational architecture (i.e., how it is trained to produce seemingly sentient outputs).

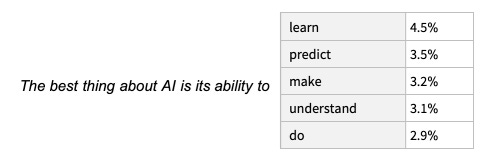

Despite ChatGPT's black-magic-like abilities, how it works under the hood is quite simple: it iteratively makes an educated guess about which word is most contextually appropriate to come next, given the text it has already generated. This process is called next-token prediction (a token being a word or a piece of a word).

It can estimate the likelihood of the next word because it has been trained on a large portion of the Internet, learning from billions of pages of human-written text which words tend to follow a given context, and then extrapolating from those patterns.
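To make next-token prediction concrete, here is a deliberately tiny sketch in Python. It is a toy built on strong simplifying assumptions, not how ChatGPT works internally: it counts which word follows which in a three-sentence corpus (a so-called bigram model) and always picks the most frequent continuation, whereas real LLMs use neural networks over subword tokens trained on vastly more text. The function names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedy decoding: repeatedly append the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        next_word, _count = candidates.most_common(1)[0]  # ties go to the word seen first
        words.append(next_word)
    return " ".join(words)


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
follows = train_bigram(corpus)
print(generate(follows, "the dog", max_new_words=4))  # the dog sat on the cat
```

The output, "the dog sat on the cat," never appears in the training sentences: every step is locally plausible, yet the result is something the corpus never said. That is a miniature version of the hallucination problem discussed below.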

Next-token prediction at this scale is the backbone of LLMs' superior performance, giving them a longer memory for processing text and a better ability to reason. This does not mean, however, that AI is ready for large-scale deployment in the real world, as major shortcomings still make it less capable than one would expect:

The LLM's knowledge is very generalized and highly compressed, making it prone to hallucination

LLMs are still considered a black box, resulting in a terrible interface

Let's take these one at a time.

LLM is a reasoning machine but not a knowledge expert

Hallucination, the tendency of LLMs to spew out "facts" that are completely false or nonexistent, is one of the biggest roadblocks standing in the way of their becoming a truly trusted source of information.

LLMs can only reason well over the information you give them. If a model is not given enough context about the subject of a request, it will struggle to connect the right dots between the relevant pieces of information. One way to address this is to feed the model more context through prompting. One such technique is "few-shot prompting," which grounds the model in a specific context by providing examples of what you want it to accomplish before you make the actual request; "chain of thought" prompting goes a step further by spelling out the intermediate reasoning steps in those examples.
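A minimal sketch of how such a few-shot prompt might be assembled, assuming a plain-text "Input:/Output:" layout. The layout and the helper name `build_few_shot_prompt` are illustrative assumptions, not any particular vendor's API, and the call that would send the string to a model is omitted.

```python
def build_few_shot_prompt(
    instruction: str, examples: list[tuple[str, str]], query: str
) -> str:
    """Place worked examples ahead of the real request so the model can imitate them."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between examples
    # The real request uses the same layout, with the output left for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each hotel review as positive or negative.",
    [
        ("The staff were friendly and check-in was fast.", "positive"),
        ("The shower was cold every single morning.", "negative"),
    ],
    "The view was lovely, but the wifi never worked.",
)
print(prompt)
```

Ending the prompt with an empty "Output:" invites the model to continue the established pattern, which is exactly the next-token behavior described earlier.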

Providing additional context, however, is not always a panacea, as a bigger problem underlying hallucination still needs to be addressed: the model's lossy, overly generalized, and limited knowledge base.

Virtually all of a language model's knowledge comes from the Internet. Despite its expansiveness, we can all agree that the Web contains only a fraction of human knowledge. As a result, LLMs tend to have a tough time providing credible answers on subjects that are poorly represented on the Internet, such as advanced mathematics and physics (GPT-4 has made great progress, but other LLMs still have trouble solving math problems).

Moreover, the model cannot absorb everything on the Web, for reasons of both memory and cost, so it must compress the knowledge it gains from the training data to a manageable size. This has raised concerns that the compression could distort the semantic meaning of the original sources.

Ted Chiang, the science fiction writer famous for his short story "Story of Your Life," likens the LLM to a heavily compressed image that looks like an accurate representation of the original but whose details turn out to be distorted when you zoom in. As he put it in The New Yorker:

Think of ChatGPT as a blurry JPEG of all the text on the Web. It retains much of the information on the Web, in the same way that a JPEG retains much of the information of a higher-resolution image, but, if you’re looking for an exact sequence of bits, you won’t find it; all you will ever get is an approximation.

The lack of nuance in LLMs' knowledge may not be a huge problem in and of itself: as long as we are aware of it, we can still use our discretion about whether to treat the model as a credible source. What makes LLMs special, and potentially dangerous, is their ability to fabricate with extreme confidence and conviction, which can trick people into believing the output faithfully captures the original source when it does not. We have already begun to see AI-fabricated text surface in the real world, undermining the legitimacy of professional services.

What's more, as more and more AI-generated text appears on the Internet and is used to train the next generation of models, the Internet becomes a blurrier version of itself and a less desirable source of training data. With high-quality training data rapidly being depleted and synthetic data growing exponentially online, the quality of AI-generated text may plateau and even begin to degrade.

AI is a terrible interface

Aside from the propensity to hallucinate, another fundamental problem with LLMs is that we do not yet have a solid grasp of how they actually work internally, despite some progress in explaining the inner workings of language models. This limited understanding makes the LLM a quasi black box: we cannot tell how a change in one part of the input will alter which part of the output.

To be clear, there are still ways to exert some control over the output. First, we can tweak parameters on the backend, such as the "temperature," which controls the randomness of the output and therefore how predictable or creative the model's responses are. Second, setting high-level constraints in prompts helps tailor the model's response (e.g., it will write in a different tone depending on the role you ask it to play). However, these techniques only go so far, and we still cannot reliably predict how the model will respond to a given input.
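For readers curious about what the temperature setting does mechanically, a common formulation divides the model's raw scores (logits) for each candidate token by the temperature before applying a softmax, then samples from the resulting distribution. The sketch below assumes made-up logits for four candidate words; it illustrates the idea rather than any provider's exact implementation, and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens: list[str], logits: list[float], temperature: float,
                rng: random.Random) -> str:
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["great", "good", "fine", "purple"]
logits = [2.0, 1.5, 0.5, -1.0]  # hypothetical scores for the word after "The food was"

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}:", {tok: round(p, 3) for tok, p in zip(tokens, probs)})

rng = random.Random(42)
print([sample_next(tokens, logits, 1.0, rng) for _ in range(5)])
```

With these made-up scores, T=0.2 puts over 90% of the probability on "great," while at T=2.0 even the odd choice "purple" gets roughly 9%, which is why higher temperatures feel more creative and less predictable.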

Ethan Mollick, a professor at Wharton, ran an interesting experiment to test how GPT-4's response would change depending on whether it was prompted to act as a genius writer, a great writer, or just a writer. According to his Twitter poll, adding that extra adjective seemed to have a trivial effect on the quality of the output.

Because of this disconnected feedback loop, we cannot build a conceptual model that predicts how an LLM will behave given an input, which makes it a terrible interface. The lack of transparency and predictability forces users into trial and error, creating a steeper learning curve for laypeople trying to use the tool effectively.

Some human-specific qualities will never be replaced by AI

That said, given the lightning-fast progress of AI research, I am confident that the drawbacks above will eventually be resolved by future generations of models, and the day when AI's intelligence surpasses that of humans may not be far off. But does that mean human workers will then be completely overtaken by agents? My sense is probably not, mainly because humans still hold one big advantage over AI: interaction with and input from our peers remain a quintessential part of how our society works.

Humans are a better interface than AI

If we were to score how good humans are as interfaces, we would probably land at the lower end of the spectrum. Too often we lack a conceptual model that can precisely predict how another person will interpret and respond to what we say. This is why conflict and miscommunication are ubiquitous in everyday life.

Nonetheless, our conceptual models of human interaction are often still better (more predictive) than our models of AI black boxes, for two main reasons. First, humans' pre-existing knowledge of the world offers a general framework for establishing common ground. Each person has a unique life journey that shapes his or her distinct view of the world, resulting in today's diverse and rich humanity. But beneath that diversity lies another layer of knowledge, built on the shared values of our social apparatus. With such a strong prior, we expect others to behave much as we would in certain situations, which reduces friction and facilitates interaction between people of different demographics and cultural backgrounds.

Second, even when there is misalignment, back-and-forth interaction and feedback almost always help us reach a consensus in the end, unlike AI, which goes straight into hallucination mode. It is perfectly normal for human collaborators not to fully grasp what others are saying at the start of a conversation, but we use various repair strategies, such as asking clarifying questions, thinking out loud, and establishing shared terminology, to resolve ambiguities and clear up misunderstandings. Not to mention that working with people is itself a "context-heavy" art that requires as much "instinct" and "experience" as knowledge of the specific subject. For instance, few job descriptions list "stakeholder management" as a must-have requirement, yet in reality it is a significant part of many jobs. Such abstract capabilities are nowhere to be found on the Internet, nor can they be learned directly, so there will remain many tasks that humans can perform better than an agent.

Human interaction also serves as a vehicle for emotional support

Beyond being a necessity for professional success, human interaction plays a critical role in people's physical and emotional well-being. As a gregarious species, we value relationships and draw energy from spending time together. Think about how often our days are brightened by small human touches in real exchanges, like sincere eye contact, a friendly smile, or a burst of laughter, and, by contrast, how annoying it is to be served by an automated agent when you call customer support.

"A doctor I know once sneaked a beer to a terminally ill patient, to give him something he could savor in a process otherwise devoid of pleasure. It was an idea that didn’t appear in any clinical playbook, and that went beyond words—a simple, human gesture." - A simple human gesture can make a huge difference

But now imagine a world where all that human work is done by autonomous robots. Would you enjoy interacting with agents as much as you once enjoyed interacting with humans? Sadly, we seem to be heading down this path with little hesitation. With the proliferation of the Internet and social media, people, especially teenagers, are more socially isolated than ever, contributing to rising unhappiness and suicide rates. More concerning still, automation technology like AI may exacerbate the entrenched inequality in our society. Human interaction, which should be universally available, is being monetized as a luxury as businesses court affluent customers with privileges like concierge services or VIP phone lines. Employers rushing to jump on the AI bandwagon have begun planning to phase out existing job functions that could be replaced by AI (which sounds very much like a pretext to cut jobs in today's high-interest-rate environment).

This is not to say that businesses should resist all automation and suddenly turn themselves into non-profits. Instead, companies, especially those in consumer-facing sectors such as healthcare and hospitality, should strike a balance between hiring human workers and deploying autonomous technology. Rather than fixating on reducing headcount for the sake of efficiency alone, they should be mindful of how human interaction affects both employee morale internally and customer satisfaction externally.

Envisioning a future where AI coexists with human workers harmoniously

So if workers are not going to be overtaken by AI, how will AI benefit humanity in the foreseeable future? My answer is straightforward: AI will make everyone a better, more productive worker by taking over the drudgery that we are neither fond of nor good at.

While the Internet has made information more accessible than ever before, it has also led to an explosion in the amount of data available today, at the cost of people's ability to focus and think strategically.

Although the human mind is a powerful tool for generating ideas and solving complex problems, it is not designed to store or process massive amounts of data. And with social media, online advertising, and sponsored content all competing for web traffic and users' attention, it has become virtually impossible for the unaided mind to cut through the noise. As a result, we often find ourselves spending the majority of our time manually searching for and processing information, leaving little capacity for the things we typically excel at: thinking, connecting, and creating. In fact, a Microsoft Work Trend Index survey found that 62% of knowledge workers struggle with spending too much time searching for information, and the vast majority of them complain about not having enough uninterrupted focus time at work, a sign of the information overload we all share nowadays.

Fortunately, AI, and LLM-powered technology in particular, can dramatically alleviate such productivity headaches. AI is at its best with incremental tasks that progress by adding one word or sentence at a time. Such tasks, like taking meeting notes, drafting emails, or summarizing research articles, are typically the work humans like least, and they are among the most effective use cases for AI.

That is why I firmly believe that AI is a complement to, not a replacement for, human work, especially for knowledge workers, whom the media has touted as the group most likely to be disrupted. Instead of directly substituting for human judgment in decision-making, I envision AI acting as an emissary between the knowledge layer and the judgment layer, retrieving and processing the information most pertinent to our work (to ensure accuracy and reduce hallucination, we would need to refine its knowledge base with contextual data such as proprietary enterprise materials or personal documents).
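Refining a model's knowledge with contextual data is often done through retrieval-augmented generation: fetch the most relevant internal documents first, then ask the model to answer from them. Below is a minimal sketch under simplifying assumptions: relevance is scored by plain word overlap (production systems more commonly use embedding-based vector search), the document names and contents are hypothetical, and the final call to a model is left out.

```python
import re

STOPWORDS = {"a", "an", "the", "is", "are", "of", "on", "to", "for", "what"}


def tokenize(text: str) -> set[str]:
    """Lowercase, split into alphanumeric words, and drop common filler words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the names of the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda name: len(query_words & tokenize(documents[name])),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(query: str, documents: dict[str, str], top_k: int = 2) -> str:
    """Put the retrieved passages in front of the question so the model answers from them."""
    names = retrieve(query, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = {  # hypothetical internal documents
    "travel_policy.txt": "Employees may book economy flights for trips under six hours.",
    "holiday_calendar.txt": "The office is closed on the first Monday of September.",
    "expense_policy.txt": "Meal expenses on business trips are reimbursed up to 50 dollars per day.",
}
question = "What is the meal reimbursement limit on business trips?"
print(build_grounded_prompt(question, docs))
```

Note that "reimbursement" and "reimbursed" do not match under this naive scoring; handling such variants is one reason real systems tend to favor embeddings over exact word overlap.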

Ultimately, no matter how much traction the narrative of AI-driven mass unemployment gains, most human talent is here to stay for the foreseeable future. And despite the drawbacks discussed above, AI's unique capabilities will reinvigorate humanity's innate creativity and stagnant productivity, not only ushering in the next stage of technological revolution and economic growth but, most importantly, freeing us from menial tasks so we can spend more time with our fellow humans.